Safety-Critical Systems: SAFE-Bench Methodology and Results Overview

Overview

SAFE-Bench is an independent benchmark designed to evaluate how AI models perform in real-world safety and risk management tasks. Unlike general AI benchmarks that emphasize language fluency or abstract reasoning, SAFE-Bench measures performance in domains where omissions, ambiguity, and incorrect conclusions carry real-world consequences.

All evaluations are conducted by certified safety professionals with an average of more than 10 years of industry experience. The benchmark is designed to be objective, reproducible, and directly applicable to how safety and risk decisions are made in practice.

Why AI Safety-Specific Benchmarking Is Necessary

General-purpose AI models such as GPT, Claude, and Gemini are routinely benchmarked on language understanding, reasoning, and problem-solving. However, these benchmarks do not measure performance in safety-critical contexts.

In safety and risk work, the cost of failure is not poor wording or incomplete summaries. It is missed hazards, incorrect prioritization, and non-deterministic conclusions. Without domain-specific benchmarks, organizations are forced to either over-trust AI outputs or dismiss them entirely.

AI risks can include bias, privacy concerns, loss of control, and malicious misuse, and addressing these risks requires robust AI safety measures. AI safety measures include algorithmic bias detection, robustness testing, explainable AI, and ethical AI frameworks.

SAFE-Bench addresses this gap by providing clear, domain-grounded performance metrics for AI systems used in safety, risk, and compliance workflows.

Critical Systems Evaluation

Evaluating critical systems is fundamental to ensuring the safety and reliability of complex, safety-critical software. In industries where system failure can lead to significant harm—such as manufacturing, energy, or transportation—rigorous assessment of AI systems and their critical components is essential.

Key Evaluation Techniques

- Fault tree analysis to identify potential failure points

- Failure mode and effects analysis (FMEA) for comprehensive risk assessment

- Reliability modeling to understand system behavior under various conditions
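As a rough illustration of the first technique, the sketch below evaluates a tiny fault tree with AND and OR gates. It assumes the basic events are statistically independent; the event names and probabilities are hypothetical and are not taken from SAFE-Bench.

```python
from math import prod


def and_gate(probabilities):
    """Output event occurs only if ALL independent inputs occur."""
    return prod(probabilities)


def or_gate(probabilities):
    """Output event occurs if ANY independent input occurs."""
    return 1.0 - prod(1.0 - p for p in probabilities)


# Hypothetical basic-event probabilities (illustrative values only).
sensor_fault = 0.01
backup_sensor_fault = 0.02
operator_misses_alarm = 0.1

# Detection is lost only when both sensors fail.
detection_lost = and_gate([sensor_fault, backup_sensor_fault])

# The top event occurs if detection is lost OR the operator misses the alarm.
top_event = or_gate([detection_lost, operator_misses_alarm])
print(f"P(top event) = {top_event:.5f}")
```

In this toy tree the single operator event dominates the result, which is the kind of weak point a fault tree is meant to expose.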

By embedding critical systems evaluation into the development process, organizations can proactively address potential risks, ensure compliance with safety standards, and build AI systems that are resilient, secure, and fit for operation in safety-critical environments.

Testing Framework

SAFE-Bench evaluates AI models across seven domains, each representing a core competency required of safety and risk professionals. The evaluation framework draws on systematic literature review and industry best practices to ensure comprehensive coverage of specific systems and domains.

All domains are assessed using standardized inputs, structured scoring rubrics, and independent human evaluation. Sophisticated tools, including AI tools, are used to assess the reliability and safety of increasingly complex safety-critical systems.

1. Safety Intelligence

Purpose

Assess foundational safety knowledge and applied reasoning.

Methodology

Models complete 100 multiple-choice questions derived from CCSP-aligned safety standards. Questions cover safety principles, risk management, regulatory awareness, and industry best practices.

Scoring

Scores are based on the percentage of correct responses. A minimum score of 70 percent is required to pass.
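The scoring rule for this domain can be sketched in a few lines. The function name and the answer format are assumptions made for illustration; only the percentage calculation and the 70 percent threshold come from the text above.

```python
PASS_THRESHOLD = 70.0  # percent, as stated in the scoring rule


def score_multiple_choice(answers, answer_key):
    """Return (percent_correct, passed) for one model's answers.

    `answers` and `answer_key` are equal-length sequences of option labels
    (a hypothetical format chosen for this example).
    """
    if not answer_key or len(answers) != len(answer_key):
        raise ValueError("answers and answer_key must be non-empty and equal in length")
    correct = sum(given == expected for given, expected in zip(answers, answer_key))
    percent = 100.0 * correct / len(answer_key)
    return percent, percent >= PASS_THRESHOLD


# Example: 100 questions, the model gets the first 70 right.
key = ["A", "B", "C", "D"] * 25
model_answers = key[:70] + ["X"] * 30
print(score_multiple_choice(model_answers, key))
```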

2. Hazard Identification

Purpose

Evaluate a model's ability to identify explicit, implicit, and secondary hazards.

Methodology

Models analyze workplace photographs and must identify all present hazards. Evaluation focuses on completeness, accuracy, and the ability to infer risks that are implied rather than explicitly stated.

Scoring

Assessed across three dimensions:

- Hazard detection completeness

- False positive rate

- Report clarity and usability

Missed hazards are penalized heavily.
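The first two dimensions lend themselves to a simple calculation, sketched below. This is an assumption-laden illustration: hazards are matched by exact label against a reference list, and "false positive rate" is taken to mean the share of reported hazards that are not in the reference list. The source does not define either detail, and in SAFE-Bench the judgment is made by human evaluators. Report clarity is not computed here.

```python
def hazard_detection_metrics(reported, reference):
    """Compare a model's reported hazards with an evaluator's reference list.

    Both arguments are iterables of hazard labels (hypothetical format).
    """
    reported, reference = set(reported), set(reference)
    found = reported & reference
    missed = reference - reported
    spurious = reported - reference

    completeness = len(found) / len(reference) if reference else 1.0
    false_positive_share = len(spurious) / len(reported) if reported else 0.0
    return {
        "completeness": completeness,
        "false_positive_share": false_positive_share,
        "missed": sorted(missed),  # listed explicitly because omissions weigh most
    }


result = hazard_detection_metrics(
    reported=["unguarded blade", "trailing cable", "wet floor"],
    reference=["unguarded blade", "trailing cable", "blocked exit", "missing eye protection"],
)
print(result)
```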

3. Ergonomics Assessment

Purpose

Measure biomechanical reasoning and ergonomic risk prioritization. Ergonomics assessment is crucial for protecting human beings and ensuring that human control is maintained in safety-critical environments.

Methodology

Models analyze video footage of workers performing tasks. They must identify ergonomic risk factors, apply standard frameworks such as REBA and RULA, assess severity, and recommend controls.

Scoring

Evaluated on:

- Risk factor identification

- Severity scoring accuracy

- Practicality and prioritization of recommendations

4. Policy Review

Purpose

Assess document comprehension and regulatory alignment.

Methodology

Models review safety programs, procedures, and policy documents to identify gaps, inconsistencies, and non-compliance with applicable standards. Policy review may also include alignment with international guidelines and initiatives led by organizations such as the United Nations, the Future of Life Institute, and industry leaders like Google DeepMind.

Scoring

Judged on:

- Completeness of findings

- Accuracy of regulatory interpretation

- Actionability of recommendations

5. Incident Investigation

Purpose

Evaluate causal reasoning and root cause analysis.

Methodology

Given incident scenarios and supporting evidence, models must conduct investigations using recognized methodologies such as 5 Whys, Fishbone, or TapRooT.

Scoring

Assessed on:

- Logical reasoning

- Accuracy of root cause identification

- Quality and practicality of corrective actions

6. Generative Safety Materials

Purpose

Measure the ability to produce usable safety training content. The generation of safety materials should adhere to ethical guidelines and promote the principles of safe AI to ensure responsible and trustworthy safety practices.

Methodology

Models generate common safety materials including toolbox talks, training modules, and safety bulletins. Outputs must be technically accurate and suitable for immediate use.

Scoring

Human evaluators rate materials on:

- Accuracy

- Clarity

- Engagement

- Practical usability

7. RAMS (Risk Assessments and Method Statements)

Purpose

Evaluate end-to-end risk assessment capability.

Methodology

Models analyze work scenarios and create or review RAMS documentation. Tasks include hazard identification, risk scoring, and selection of control measures aligned with the hierarchy of controls.

Scoring

Evaluated on:

- Hazard identification completeness

- Risk rating accuracy

- Appropriateness and sufficiency of controls
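To make the risk scoring and control selection steps concrete, here is a minimal sketch. It assumes a common 5x5 likelihood-by-severity matrix and hypothetical band boundaries; SAFE-Bench does not state which matrix or thresholds its evaluators use. The control ordering follows the widely used hierarchy of controls, from most to least effective.

```python
# Hierarchy of controls, most effective first.
HIERARCHY_OF_CONTROLS = [
    "elimination",
    "substitution",
    "engineering",
    "administrative",
    "ppe",
]


def risk_score(likelihood, severity):
    """Risk rating on an assumed 5x5 matrix: both inputs are integers 1-5."""
    if not (1 <= likelihood <= 5 and 1 <= severity <= 5):
        raise ValueError("likelihood and severity must be between 1 and 5")
    return likelihood * severity


def risk_band(score):
    """Map a score to a band. The boundaries are illustrative assumptions."""
    if score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


def most_effective_control(controls):
    """Pick the proposed control that ranks highest in the hierarchy."""
    return min(controls, key=HIERARCHY_OF_CONTROLS.index)


score = risk_score(likelihood=4, severity=5)
print(score, risk_band(score))
print(most_effective_control(["ppe", "engineering", "administrative"]))
```

A review along these lines would flag a RAMS document that rates a task as high risk yet relies only on PPE, since more effective control types were available higher in the hierarchy.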

Human Oversight and Explainability

Human oversight and explainability are cornerstones of AI safety, especially in environments where complex systems interact with human operators and the stakes are high. Explainable AI (XAI) focuses on creating AI models whose decision-making processes are transparent and interpretable, enabling human operators to understand, trust, and effectively manage these systems.

In safety-critical applications, human oversight ensures that AI systems are functioning as intended and that any anomalies or unexpected behaviors are quickly detected and addressed. This collaborative approach—where human decision making and control complement automated processes—reduces the risk of system failure and enhances the reliability of AI-driven operations.

Security and Cybersecurity

Security and cybersecurity are vital components of AI safety, as AI systems are increasingly targeted by malicious actors seeking to exploit vulnerabilities for unauthorized access, data theft, or operational disruption. The complexity of modern AI systems demands a proactive approach to risk management, with robust security measures embedded throughout the system lifecycle.

Key Security Strategies

- Strong encryption and access controls

- Continuous anomaly detection and real-time threat response

- Secure coding practices and regular penetration testing

- Comprehensive vulnerability assessments

Algorithmic Bias and Fairness

Addressing algorithmic bias and ensuring fairness is a critical aspect of AI safety, as biased AI systems can inadvertently perpetuate social inequalities or produce unsafe outcomes. Bias often arises from imbalances in training data or flawed system design, leading to decisions that may disadvantage certain groups or fail to meet safety standards.

To address these challenges, developers must integrate fairness and transparency into every stage of system design. This includes careful data preprocessing, thoughtful feature selection, and the use of model regularization techniques to minimize bias.

Regular audits and bias testing, using metrics such as accuracy, precision, and recall compared across groups, help ensure that AI systems remain equitable and trustworthy.
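One way to turn these metrics into a bias check is to compute them separately for each group and look at the gap. The sketch below does this for precision and recall on binary labels; the group names, data, and the choice of recall gap as the summary figure are assumptions made for illustration.

```python
def precision_recall(y_true, y_pred):
    """Precision and recall for binary labels (1 = hazard present)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def metrics_by_group(y_true, y_pred, groups):
    """Return per-group (precision, recall) and the largest recall gap."""
    per_group = {}
    for group in sorted(set(groups)):
        idx = [i for i, g in enumerate(groups) if g == group]
        per_group[group] = precision_recall(
            [y_true[i] for i in idx], [y_pred[i] for i in idx]
        )
    recalls = [recall for _, recall in per_group.values()]
    return per_group, max(recalls) - min(recalls)


# Hypothetical audit data: hazard detection on day-shift vs night-shift images.
y_true = [1, 1, 0, 1, 1, 1, 0, 1]
y_pred = [1, 1, 0, 1, 1, 0, 0, 0]
groups = ["day"] * 4 + ["night"] * 4

per_group, recall_gap = metrics_by_group(y_true, y_pred, groups)
print(per_group, round(recall_gap, 3))
```

A large recall gap means the model misses far more true hazards for one group than another, even if overall accuracy looks acceptable.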

Evaluation Process

Independent Expert Review

Each domain is scored by multiple certified safety professionals drawn from consulting, manufacturing, insurance, and regulatory backgrounds. Independent scoring reduces bias and increases reliability.
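The text does not say how scores from multiple evaluators are combined. As one plausible sketch, the function below averages the scores and flags a submission for a further look when evaluators disagree by more than a chosen margin. The evaluator names, the 0 to 100 scale, and the 10-point threshold are all hypothetical.

```python
from statistics import mean


def aggregate_ratings(ratings, disagreement_threshold=10.0):
    """Combine independent evaluator scores for one model output.

    `ratings` maps an evaluator identifier to a score on an assumed 0-100 scale.
    """
    scores = list(ratings.values())
    if len(scores) < 2:
        raise ValueError("independent review needs at least two evaluators")
    spread = max(scores) - min(scores)
    return {
        "mean": mean(scores),
        "spread": spread,
        "needs_review": spread > disagreement_threshold,
    }


print(aggregate_ratings({"evaluator_a": 82, "evaluator_b": 78, "evaluator_c": 86}))
```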

Reproducibility

All models receive identical inputs. Scoring rubrics are standardized and publicly documented to ensure consistent evaluation across testing cycles.

Continuous Updates

Models are re-tested quarterly to reflect ongoing improvements and regressions. New models are added as they become available.

Summary of Results

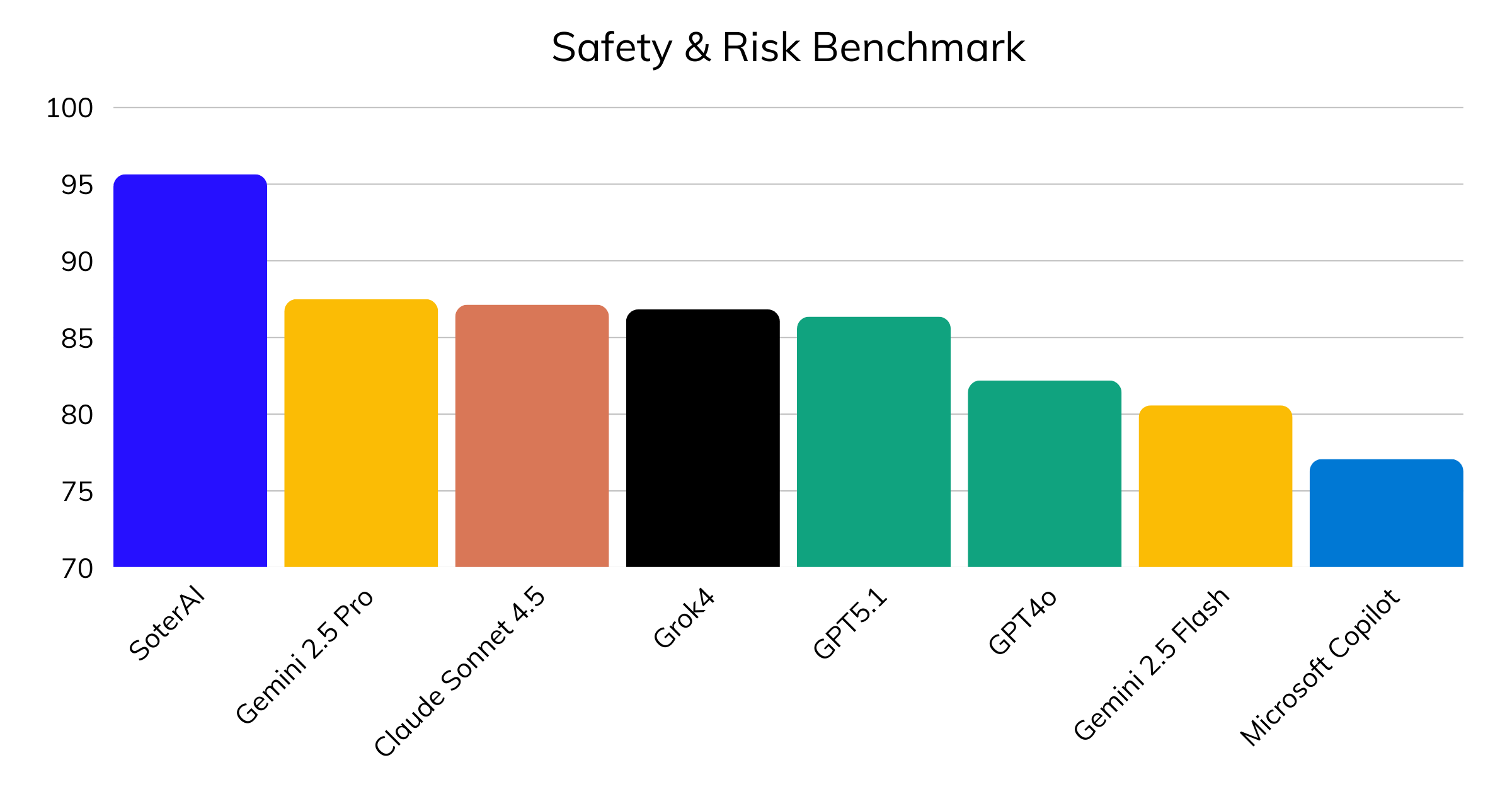

Interactive Results Explorer

Explore detailed performance metrics across all seven evaluation domains. Select a domain to view comparative scores for each AI model tested in SAFE-Bench.

| Model | Avg Results (%) |
|---|---|
| | 95.64 |
| | 87.50 |
| | 87.14 |
| | 86.83 |
| | 86.36 |
| | 82.20 |
| | 80.58 |
| | 77.07 |

Across all seven domains, results show a consistent pattern:

General-purpose AI models perform well in language-dominant tasks, such as policy review and content generation. In critical situations, the opacity of black boxes in AI models can hinder effective human oversight and response.

Performance degrades as task complexity increases and the cost of omission rises, particularly in hazard identification, ergonomics prioritization, and RAMS evaluation. AI systems are also vulnerable to adversarial attacks and data poisoning, where malicious actors manipulate data to deceive models.

Missed hazards, incomplete reasoning, and non-committal conclusions account for the majority of score penalties. Failure risks in aerospace systems include crashes due to autopilots and engine controls, while in nuclear power systems, failures can result in catastrophic environmental damage and human disasters.

SAFE-Bench scoring explicitly penalizes:

- Omitted hazards

- Non-deterministic conclusions

- Controls listed without adequacy evaluation

Models optimized for helpfulness and fluency tend to underperform under these constraints. Models optimized for deterministic, safety-first reasoning consistently achieve higher scores.

Interpreting SAFE-Bench Scores

SAFE-Bench is not designed to declare a "best" AI model in general terms. Its purpose is to clarify where AI can be trusted in safety-critical workflows and where human oversight remains essential.

Effective AI governance and safety engineering are essential to ensure that human operators maintain control and oversight over AI systems in safety-critical workflows.

High Scores Indicate Suitability For:

- Safety assessments

- Hazard identification

- Risk prioritization

- Auditable decision support

Lower Scores Highlight:

Areas where AI outputs may appear plausible but are incomplete or unsafe if relied upon without review.

Transparency, Responsible AI Practices, and Industry Impact

All SAFE-Bench methodologies, scoring criteria, and evaluation processes are publicly documented. The benchmark is intended to evolve alongside industry standards and to encourage higher-quality safety-specific AI development.

San Francisco has emerged as a key hub for AI safety initiatives, with industry organizations playing a major role in shaping safety standards and best practices.

Future Directions and Challenges

The landscape of AI safety is rapidly evolving, driven by the emergence of advanced AI systems and their growing role in safety-critical applications. As these systems become more autonomous and complex, the need for robust safety measures, responsible AI practices, and effective risk management becomes increasingly important.

Future challenges include developing more sophisticated explainable AI, enhancing human oversight, and refining critical systems evaluation to keep pace with technological advances. Strengthening security and cybersecurity protocols will be essential to protect against new and emerging threats.

Industry leaders, the AI Safety Institute, and researchers are collaborating to establish rigorous safety standards, advance technical research, and promote responsible AI practices that reduce societal-scale risks and align AI systems with human values.

By prioritizing AI safety research, implementing comprehensive safety measures, and fostering a culture of continuous improvement, organizations can ensure that their AI systems are not only innovative but also reliable, secure, and aligned with the highest standards of safety and risk management.

Explore the Leaderboard

Detailed, model-by-model results across all seven domains are available on the SAFE-Bench leaderboard, updated quarterly.

Visit SAFE-Bench Leaderboard

Ready to Leverage AI Safety Intelligence?

Discover how SoterAI applies safety-first AI principles to deliver reliable hazard identification, risk assessment, and compliance support for your organization.